Configuring the OrangePi cluster

The first boot is from the SD card.

I flashed the Debian server image downloaded from the official site onto a new SD card, then plugged it into the card slot and pressed the power button.

On the first boot, updating the system packages with APT is a good idea.

sudo apt update

sudo apt dist-upgrade

The SD card worked fine, but performance is much better once the OS is moved to the M.2 NVMe SSD.

Booting from the SSD

First we need to partition the SSD:

sudo gdisk /dev/nvme0n1

A new disk might come with partitions already on it, and it’s important to delete them all before creating one for the OS.

- “d” to delete. I had to delete partitions 1 to 8.

- “n” for a new partition. Confirm all default values.

- “w” to write. This saves the changes and exits gdisk.
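The same steps can also be scripted with sgdisk, the non-interactive companion of gdisk. The following is only a sketch under a few assumptions: the disk is /dev/nvme0n1 as above, nothing on it needs to be kept, and the `run` helper and `DRY_RUN` variable are names made up for this example. By default it only prints the commands, because wiping the wrong disk is not recoverable.

```shell
DISK=/dev/nvme0n1        # same device as in the gdisk command above
DRY_RUN=${DRY_RUN:-1}    # hypothetical switch: 1 = only print the commands

# Print the command in dry-run mode, execute it otherwise.
run() {
    if [ "$DRY_RUN" = 1 ]; then
        echo "+ $*"
    else
        "$@"
    fi
}

run sudo sgdisk --zap-all "$DISK"      # remove every existing partition table entry
run sudo sgdisk --new=1:0:0 "$DISK"    # partition 1, default start and end (whole disk)
run sudo sgdisk --print "$DISK"        # show the resulting layout
```

Setting `DRY_RUN=0` before running the script makes it execute the commands for real.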

Now it’s time to install the boot disk on the SSD.

sudo orangepi-config

Choose “system” and then choose “install boot from SPI”.

The process takes a few minutes.

Once it’s over you can power down the OrangePi, remove the SD card and power the machine on again. You should see a much faster boot than with the SD card.
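To confirm that the system really booted from the SSD, one option is to look at which device backs the root filesystem. This is a small sketch, assuming the SSD shows up as /dev/nvme0n1 like in the partitioning step; `root_source` and `is_nvme` are helper names invented here.

```shell
# Print the block device that backs the root filesystem.
root_source() {
    findmnt -n -o SOURCE /
}

# Succeed when the given device path looks like an NVMe device or partition.
is_nvme() {
    case "$1" in
        /dev/nvme*) return 0 ;;
        *)          return 1 ;;
    esac
}

src=$(root_source)
if is_nvme "$src"; then
    echo "root filesystem is on the SSD: $src"
else
    echo "root filesystem is NOT on the SSD: $src"
fi
```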

Network

First I configured the host name for every Orange Pi.

sudo nano /etc/hostname

I chose incremental names from orange-1 to orange-5.

orange-#

Then I wanted to assign a static IP so that the master node of the cluster knows where to find them.

sudo nano /etc/network/interfaces

auto eth0

iface eth0 inet static

address 10.0.0.101

netmask 255.255.255.0

gateway 10.0.0.1

To restart the service:

sudo systemctl restart NetworkManager.service

It’s important to make sure all the machines in the cluster have the same timezone, otherwise the Slurm controller will not work properly:

sudo ln -sf /usr/share/zoneinfo/Europe/Madrid /etc/localtime

[Optional] I also wanted to be sure my internal DNS would be queried first.

sudo nano /etc/resolv.conf

nameserver 10.0.0.20

nameserver 10.0.0.1

And restart the service:

sudo systemctl restart resolvconf.service

Singularity

This is a personal choice that I made to reduce the configuration time of every node.

From the official website: “Singularity is a widely-adopted container runtime that implements a unique security model to mitigate privilege escalation risks and provides a platform to capture a complete application environment into a single file (SIF).”

It allows me to generate a single Docker container that holds all the software needed for a specific project.

Singularity is safer than running a Docker instance on every Slurm node: there is no daemon running, and the container runs entirely in user space without requiring root permissions.

The installation requires Go to be installed first; after that you can install Singularity and run it on your node.
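As an illustration of the workflow, here is a minimal sketch that converts a Docker image into a single SIF file and runs a command inside it. The source image (debian:bookworm-slim) and the `sif_name_for` naming helper are assumptions chosen for the example, not part of my setup; replace them with the image that holds your project software.

```shell
SOURCE=docker://debian:bookworm-slim   # hypothetical source image

# Derive a file name from the image URI, e.g. debian_bookworm-slim.sif.
sif_name_for() {
    name=${1#docker://}
    printf '%s.sif\n' "$(printf '%s' "$name" | tr '/:' '__')"
}

IMAGE=$(sif_name_for "$SOURCE")

if command -v singularity >/dev/null 2>&1; then
    # Build the SIF file once, then run a command inside the container.
    singularity build "$IMAGE" "$SOURCE" &&
    singularity exec "$IMAGE" cat /etc/os-release
else
    echo "singularity is not installed, skipping the build of $IMAGE"
fi
```

If the resulting file is stored in a home folder shared between the nodes, every node can run the same image without any extra installation.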

Shared home space

I wanted to be sure that the master-node and the nodes had the same shared home folder.

On the master-node (or on a separate machine):

sudo nano /etc/exports

/home 10.0.0.0/255.255.255.0(rw,sync,no_root_squash,no_subtree_check)

You can adapt the network parameters to your own network.
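For reference, an exports line is just a directory, a client network and a list of options. The sketch below builds one with a made-up helper, `export_line`, using the equivalent CIDR notation 10.0.0.0/24. The commented commands at the end are what I would expect to run on a Debian NFS server after saving the file; they assume the nfs-kernel-server package is installed.

```shell
# Build one /etc/exports line: directory, client network, options.
export_line() {
    printf '%s %s(%s)\n' "$1" "$2" "$3"
}

export_line /home 10.0.0.0/24 rw,sync,no_root_squash,no_subtree_check

# On the real server, after editing /etc/exports (needs root):
# sudo exportfs -ra    # re-read /etc/exports and apply the changes
# sudo exportfs -v     # list what is currently exported, with options
```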

On the clients:

sudo nano /etc/fstab

10.0.0.14:/home /home nfs auto,nofail,noatime,nolock,intr,tcp,actimeo=1800 0 0

Slurm

The master node of this project runs on a virtual machine on a Proxmox instance. It needs few resources: I dedicated two CPUs and a couple of gigabytes of RAM to it.

Before installing Slurm it’s important to install Munge and copy the key from the master-node to the processing-nodes.

sudo apt install -y libmunge-dev libmunge2 munge

From the master node:

scp /etc/munge/munge.key root@orange-2:/etc/munge/munge.key

Fix the “munge” user values on both the master node and the compute nodes; the UID and GID must be the same on all machines:

sudo nano /etc/passwd

munge:x:501:501::/nonexistent:/usr/sbin/nologin

Now you can install Slurm on every node:

sudo apt install slurm-wlm -y

sudo touch /var/slurmd.pid

sudo chown slurm:slurm /var/slurmd.pid

sudo mkdir /var/spool/slurm

sudo chown -R slurm:slurm /var/spool/slurm

sudo chown -R slurm:slurm /var/log/slurm

Now it’s time to edit the configuration file for Slurm.

sudo nano /etc/slurm/slurm.conf

ClusterName=oranges

ControlMachine=slurm-boss

SlurmUser=slurm

SlurmctldPort=6817

SlurmdPort=6818

AuthType=auth/munge

StateSaveLocation=/var/spool/slurmctld

SlurmdSpoolDir=/var/spool/slurm/slurmd

SwitchType=switch/none

MpiDefault=none

SlurmctldPidFile=/var/slurmctld.pid

SlurmdPidFile=/var/slurmd.pid

ProctrackType=proctrack/pgid

CacheGroups=0

ReturnToService=2

# TIMERS

SlurmctldTimeout=300

SlurmdTimeout=300

InactiveLimit=0

MinJobAge=300

KillWait=30

Waittime=0

# SCHEDULING

SchedulerType=sched/backfill

SelectType=select/cons_res

SelectTypeParameters=CR_Pack_Nodes,CR_Core_Memory

# DEFAULTS

DefMemPerCPU=1024

# LOGGING

SlurmctldDebug=3

SlurmdDebug=3

JobCompType=jobcomp/none

JobCompLoc=/tmp/slurm_job_completion.txt

SlurmdLogFile=/var/log/slurm/slurm.log

SlurmctldLogFile=/var/log/slurm/slurmctl.log

#

# ACCOUNTING

JobAcctGatherType=jobacct_gather/linux

JobAcctGatherFrequency=30

#

#AccountingStorageType=accounting_storage/slurmdbd

#AccountingStorageHost=slurm

#AccountingStorageLoc=/tmp/slurm_job_accounting.txt

#AccountingStoragePass=

#AccountingStorageUser=

#

# COMPUTE NODES

# control node

NodeName=slurm-boss NodeAddr=10.0.0.14 Port=17000 State=UNKNOWN

# compute nodes

NodeName=orange-1 RealMemory=7573 Procs=8 NodeAddr=10.0.0.101 Port=17000 State=UNKNOWN

NodeName=orange-2 RealMemory=7573 Procs=8 NodeAddr=10.0.0.102 Port=17000 State=UNKNOWN

NodeName=orange-3 RealMemory=7573 Procs=8 NodeAddr=10.0.0.103 Port=17000 State=UNKNOWN

NodeName=orange-4 RealMemory=7573 Procs=8 NodeAddr=10.0.0.104 Port=17000 State=UNKNOWN

NodeName=orange-5 RealMemory=7573 Procs=8 NodeAddr=10.0.0.105 Port=17000 State=UNKNOWN

#NodeName=guane-2 NodeAddr=192.168.1.72 Port=17003 State=UNKNOWN

# PARTITIONS

# partition name is arbitrary

PartitionName=arm Nodes=orange-[1-5] Default=YES MaxTime=8-00:00:00 State=UP

Time to activate the Slurm daemon on the nodes:

sudo systemctl enable slurmd

sudo systemctl start slurmd

To see if the service is running:

sudo systemctl status slurmd

On the master node run the controller:

sudo systemctl enable slurmctld

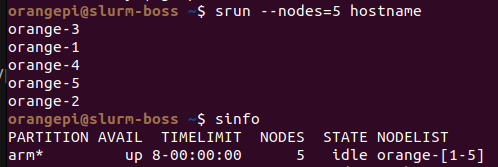

sudo systemctl start slurmctld

If everything is working fine you should be able to see all your nodes from the master:

orangepi@slurm-boss ~$ sinfo

PARTITION AVAIL TIMELIMIT NODES STATE NODELIST

arm* up 8-00:00:00 5 idle orange-[1-5]
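If some nodes are missing from the idle list, a per-node view is easier to read than the summary above. The following sketch filters the output of sinfo for nodes that are in none of the usual working states; `unhealthy_nodes` is a helper name invented here, and the list of states I treat as healthy (idle, allocated, mixed) is my own assumption.

```shell
# Read "node state" pairs on stdin and print the nodes that are not usable.
unhealthy_nodes() {
    awk '$2 != "idle" && $2 != "allocated" && $2 != "mixed" { print $1 }'
}

if command -v sinfo >/dev/null 2>&1; then
    # -h: no header, -N: one line per node, -o: node name and state
    sinfo -h -N -o "%N %T" | unhealthy_nodes
fi
```

An empty output means every node is either idle or busy with a job.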

A simple way to see if they are all responding:

orangepi@slurm-boss ~$ srun --nodes=5 hostname

orange-3

orange-1

orange-4

orange-5

orange-2
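The same check can be submitted as a batch job, which is how most real work ends up running on the cluster. This is a minimal sketch: the file name hello.sbatch and the job name are arbitrary choices of mine, while the partition name arm and the five nodes come from the configuration above.

```shell
# Write a small batch script; the quoted 'EOF' keeps the text literal.
cat > hello.sbatch <<'EOF'
#!/bin/bash
#SBATCH --job-name=hello
#SBATCH --partition=arm
#SBATCH --nodes=5
#SBATCH --ntasks-per-node=1
#SBATCH --output=hello-%j.out

# One task per node, each printing its own host name.
srun hostname
EOF

# Submit only where Slurm is actually installed.
if command -v sbatch >/dev/null 2>&1; then
    sbatch hello.sbatch
else
    echo "sbatch not found; wrote hello.sbatch only"
fi
```

The `%j` in the output file name is replaced by the job ID, and the file is written to the directory the job was submitted from, so submitting from the shared home folder makes the result visible from the master node.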